What is artificial intelligence?

Artificial intelligence (AI) is a wide-ranging branch of computer science concerned with building smart machines capable of performing tasks that typically require human intelligence. While AI is an interdisciplinary science with multiple approaches, advancements in machine learning and deep learning, in particular, are creating a paradigm shift in virtually every sector of the tech industry.

Artificial intelligence allows machines to model, or even improve upon, the capabilities of the human mind. And from the development of self-driving cars to the proliferation of generative AI tools like ChatGPT and Google’s Bard, AI is increasingly becoming part of everyday life — and an area companies across every industry are investing in.

Artificial Intelligence Definition: Basics of AI

Understanding AI

Broadly speaking, artificially intelligent systems can perform tasks commonly associated with human cognitive functions — such as interpreting speech, playing games and identifying patterns. They typically learn how to do so by processing massive amounts of data, looking for patterns to model in their own decision-making. In many cases, humans will supervise an AI’s learning process, reinforcing good decisions and discouraging bad ones. But some AI systems are designed to learn without supervision — for instance, by playing a video game over and over until they eventually figure out the rules and how to win.

Strong AI Vs. Weak AI

Intelligence is tricky to define, which is why AI experts typically distinguish between strong AI and weak AI.

Strong AI

Strong AI, also known as artificial general intelligence, is a machine that can solve problems it’s never been trained to work on — much like a human can. This is the kind of AI we see in movies, like the robots from Westworld or the character Data from Star Trek: The Next Generation. This type of AI doesn’t actually exist yet.

The creation of a machine with human-level intelligence that can be applied to any task is the Holy Grail for many AI researchers, but the quest for artificial general intelligence has been fraught with difficulty. And some believe strong AI research should be limited, due to the potential risks of creating a powerful AI without appropriate guardrails.

In contrast to weak AI, strong AI represents a machine with a full set of cognitive abilities — and an equally wide array of use cases — but time hasn’t eased the difficulty of achieving such a feat.

Weak AI

Weak AI, sometimes referred to as narrow AI or specialized AI, operates within a limited context and is a simulation of human intelligence applied to a narrowly defined problem (like driving a car, transcribing human speech or curating content on a website).

Weak AI is often focused on performing a single task extremely well. While these machines may seem intelligent, they operate under far more constraints and limitations than even the most basic human intelligence.

Weak AI examples include:

- Siri, Alexa and other smart assistants

- Self-driving cars

- Google search

- Conversational bots

- Email spam filters

- Netflix’s recommendations

Machine Learning Vs. Deep Learning

Although the terms “machine learning” and “deep learning” come up frequently in conversations about AI, they should not be used interchangeably. Deep learning is a form of machine learning, and machine learning is a subfield of artificial intelligence.

Machine Learning

A machine learning algorithm is fed data and uses statistical techniques to "learn" how to get progressively better at a task, without necessarily having been specifically programmed for that task. Instead, ML algorithms use historical data as input to predict new output values. To that end, ML consists of both supervised learning (where the expected output for the input is known thanks to labeled data sets) and unsupervised learning (where the expected outputs are unknown due to the use of unlabeled data sets).
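To make the distinction concrete, here is a minimal Python sketch. The data, the function names and the choice of techniques (a nearest-neighbor classifier for the supervised case, a two-cluster k-means for the unsupervised case) are illustrative assumptions, not methods named in this article.

```python
# Toy illustration only: the data and function names are hypothetical.

def nearest_neighbor_predict(labeled_examples, x):
    """Supervised: predict the label of x from the closest labeled example."""
    _, closest_label = min(labeled_examples, key=lambda pair: abs(pair[0] - x))
    return closest_label


def two_means(values, iterations=10):
    """Unsupervised: split unlabeled 1-D values into two clusters (k-means, k=2).

    Assumes the values contain at least two distinct numbers.
    """
    low, high = min(values), max(values)  # deterministic starting centers
    for _ in range(iterations):
        cluster_low = [v for v in values if abs(v - low) <= abs(v - high)]
        cluster_high = [v for v in values if abs(v - low) > abs(v - high)]
        low = sum(cluster_low) / len(cluster_low)
        high = sum(cluster_high) / len(cluster_high)
    return sorted(cluster_low), sorted(cluster_high)


# Supervised learning: every input comes with a known label.
labeled = [(1.0, "short"), (2.0, "short"), (9.0, "long"), (10.0, "long")]
prediction = nearest_neighbor_predict(labeled, 8.5)

# Unsupervised learning: the same kind of input, but no labels are provided.
unlabeled = [1.0, 2.0, 1.5, 9.0, 10.0, 9.5]
groups = two_means(unlabeled)
```

In the supervised case the algorithm can only answer with labels it has been shown. In the unsupervised case it finds the two groups on its own, but it has no names for them.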

Deep Learning

Deep learning is a type of machine learning that runs inputs through a biologically inspired neural network architecture. The neural networks contain a number of hidden layers through which the data is processed, allowing the machine to go “deep” in its learning, making connections and weighting input for the best results.
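As a rough sketch of what "hidden layers" means in practice, the Python below passes an input through two hidden layers and one output layer. The layer sizes, the hand-picked weights and the sigmoid activation are assumptions chosen for illustration; a real network would learn its weights during training, which is not shown here.

```python
import math


def dense_layer(inputs, weights, biases):
    """One layer: a weighted sum of the inputs per neuron, then a sigmoid."""
    outputs = []
    for neuron_weights, bias in zip(weights, biases):
        total = sum(w * x for w, x in zip(neuron_weights, inputs)) + bias
        outputs.append(1.0 / (1.0 + math.exp(-total)))
    return outputs


def forward(inputs, layers):
    """Pass the inputs through each layer in turn."""
    activation = inputs
    for weights, biases in layers:
        activation = dense_layer(activation, weights, biases)
    return activation


# Hypothetical weights: 2 inputs -> 3 hidden -> 2 hidden -> 1 output
layers = [
    ([[0.5, -0.2], [0.1, 0.4], [-0.3, 0.8]], [0.0, 0.1, -0.1]),
    ([[0.2, -0.5, 0.3], [0.7, 0.1, -0.4]], [0.05, -0.05]),
    ([[1.0, -1.0]], [0.0]),
]
output = forward([1.0, 2.0], layers)
```

Each layer's output becomes the next layer's input, which is the sense in which the data is processed "deep" in the network.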

Examples of Artificial Intelligence

The Four Types of AI

AI can be divided into four categories, based on the type and complexity of the tasks a system is able to perform. They are:

- Reactive machines

- Limited memory

- Theory of mind

- Self awareness

Reactive Machines

A reactive machine follows the most basic of AI principles and, as its name implies, is capable of only using its intelligence to perceive and react to the world in front of it. A reactive machine cannot store a memory and, as a result, cannot rely on past experiences to inform decision making in real time.

Perceiving the world directly means that reactive machines are designed to complete only a limited number of specialized duties. Intentionally narrowing a reactive machine’s worldview has its benefits, however: This type of AI will be more trustworthy and reliable, and it will react the same way to the same stimuli every time.

Reactive Machine Examples

- Deep Blue was designed by IBM in the 1990s as a chess-playing supercomputer and defeated world chess champion Garry Kasparov in a six-game match in 1997. Deep Blue identified the pieces on the board, knew how each moves under the rules of chess, and searched the possible continuations from the current position to choose the strongest move. What it did not do was learn from experience as it played: every turn was computed from the present position, separate from any move that was made beforehand.

- Google DeepMind's AlphaGo also works from the present state of the game, but it relies on neural networks rather than hand-written rules to evaluate positions, giving it an edge over Deep Blue's approach in a more complex game. AlphaGo also bested world-class competitors of the game, defeating champion Go player Lee Sedol in 2016.

Limited Memory

Limited memory AI has the ability to store previous data and predictions when gathering information and weighing potential decisions — essentially looking into the past for clues on what may come next. Limited memory AI is more complex and presents greater possibilities than reactive machines.

Limited memory AI is created when a team continuously trains a model to analyze and use new data, or when an AI environment is built so models can be automatically trained and renewed.

When utilizing limited memory AI in ML, six steps must be followed:

- Establish training data

- Create the machine learning model

- Ensure the model can make predictions

- Ensure the model can receive human or environmental feedback

- Store human and environmental feedback as data

- Repeat the steps above as a cycle
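The cycle above can be sketched in a few lines of Python. The `RunningAverageModel` class is a hypothetical stand-in for a real machine learning model: it simply predicts the mean of everything it has stored, which is enough to show how stored feedback changes later predictions.

```python
class RunningAverageModel:
    """Hypothetical stand-in for an ML model: predicts the mean of its memory."""

    def __init__(self, training_data):
        # Steps 1 and 2: establish training data and create the model.
        self.memory = list(training_data)

    def predict(self):
        # Step 3: the model can make predictions.
        return sum(self.memory) / len(self.memory)

    def receive_feedback(self, observed_value):
        # Steps 4 and 5: receive feedback and store it as data.
        self.memory.append(observed_value)


model = RunningAverageModel(training_data=[10.0, 12.0])
predictions = []
for observed in [14.0, 16.0, 18.0]:  # Step 6: repeat the steps as a cycle.
    predictions.append(model.predict())
    model.receive_feedback(observed)
```

Each prediction differs from the last because the model's stored data has grown, which is the behavior a purely reactive machine lacks.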

Theory of Mind

Theory of mind is just that — theoretical. We have not yet achieved the technological and scientific capabilities necessary to reach this next level of AI.

The concept is based on the psychological premise of understanding that other living things have thoughts and emotions that affect the behavior of one’s self. In terms of AI machines, this would mean that AI could comprehend how humans, animals and other machines feel and make decisions through self-reflection and determination, and then utilize that information to make decisions of their own. Essentially, machines would have to be able to grasp and process the concept of “mind,” the fluctuations of emotions in decision-making and a litany of other psychological concepts in real time, creating a two-way relationship between people and AI.

Self Awareness

Once theory of mind can be established, sometime well into the future of AI, the final step will be for AI to become self-aware. This kind of AI would possess human-level consciousness and understand its own existence in the world, as well as the presence and emotional state of others. It would be able to understand what others may need based on not just what they communicate to it but how they communicate it.

Self-awareness in AI relies on human researchers both understanding the premise of consciousness and learning how to replicate it so it can be built into machines.

Artificial Intelligence Examples

Artificial intelligence technology takes many forms, from chatbots to navigation apps and wearable fitness trackers. The below examples illustrate the breadth of potential AI applications.

ChatGPT

ChatGPT is an artificial intelligence chatbot capable of producing written content in a range of formats, from essays to code and answers to simple questions. Launched in November 2022 by OpenAI, ChatGPT is powered by a large language model that allows it to closely emulate human writing. ChatGPT also became available as a mobile app for iOS devices in May 2023 and for Android devices in July 2023. It is just one of many chatbot examples, albeit a very powerful one.

Google Maps

Google Maps uses location data from smartphones, as well as user-reported data on things like construction and car accidents, to monitor the ebb and flow of traffic and assess what the fastest route will be.
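The article does not describe how Google Maps actually computes routes, so the sketch below swaps in a standard textbook technique, Dijkstra's shortest-path algorithm, to show how updated traffic data can change the fastest route. The road network, the travel times and the `fastest_route` function are all hypothetical.

```python
import heapq


def fastest_route(travel_minutes, start, goal):
    """Dijkstra's algorithm over a dict of {node: {neighbor: minutes}}."""
    queue = [(0, start, [start])]
    visited = set()
    while queue:
        elapsed, node, path = heapq.heappop(queue)
        if node == goal:
            return elapsed, path
        if node in visited:
            continue
        visited.add(node)
        for neighbor, minutes in travel_minutes.get(node, {}).items():
            if neighbor not in visited:
                heapq.heappush(
                    queue, (elapsed + minutes, neighbor, path + [neighbor])
                )
    return None  # no route exists


# Hypothetical road network with travel times in minutes.
roads = {
    "home": {"highway": 5, "side_street": 8},
    "highway": {"office": 10},
    "side_street": {"office": 12},
}
clear = fastest_route(roads, "home", "office")

# A user-reported accident slows the highway segment.
roads["highway"]["office"] = 25
congested = fastest_route(roads, "home", "office")
```

With clear roads the highway wins. Once the reported slowdown is reflected in the travel times, the same search picks the side street instead.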

Smart Assistants

Personal AI assistants like Siri, Alexa and Cortana use natural language processing, or NLP, to receive instructions from users to set reminders, search for online information and control the lights in people’s homes. In many cases, these assistants are designed to learn a user’s preferences and improve their experience over time with better suggestions and more tailored responses.

Snapchat Filters

Snapchat filters use ML algorithms to distinguish between an image’s subject and the background, track facial movements and adjust the image on the screen based on what the user is doing.

Self-Driving Cars

Self-driving cars are a recognizable example of deep learning, since they use deep neural networks to detect objects around them, determine their distance from other cars, identify traffic signals and much more.

Wearables

The wearable sensors and devices used in the healthcare industry also apply deep learning to assess the health condition of the patient, including their blood sugar levels, blood pressure and heart rate. They can also derive patterns from a patient’s prior medical data and use that to anticipate any future health conditions.

MuZero

MuZero, a computer program created by DeepMind, is a promising frontrunner in the quest to achieve true artificial general intelligence. It has managed to master games without being told their rules, including chess and an entire suite of Atari games, by playing them millions of times and learning from the results.

AI Benefits, Challenges and Future

Artificial Intelligence Benefits

AI has many uses — from boosting vaccine development to automating detection of potential fraud. AI companies raised $66.8 billion in funding in 2022, according to CB Insights research, more than doubling the amount raised in 2020. Because of its fast-paced adoption, AI is making waves in a variety of industries.

Safer Banking

Business Insider Intelligence’s 2022 report on AI in banking found more than half of financial services companies already use AI solutions for risk management and revenue generation. The application of AI in banking could lead to upwards of $400 billion in savings.

Better Medicine

As for medicine, a 2021 World Health Organization report noted that while integrating AI into the healthcare field comes with challenges, the technology “holds great promise,” as it could lead to benefits like more informed health policy and improvements in the accuracy of diagnosing patients.

Innovative Media

AI has also made its mark on entertainment. The global market for AI in media and entertainment is estimated to reach $99.48 billion by 2030, growing from a value of $10.87 billion in 2021, according to Grand View Research. That expansion includes AI uses like recognizing plagiarism and developing high-definition graphics.

Challenges and Limitations of AI

While AI is certainly viewed as an important and quickly evolving asset, this emerging field comes with its share of downsides.

The Pew Research Center surveyed 10,260 Americans in 2021 on their attitudes toward AI. The results found 45 percent of respondents are equally excited and concerned, and 37 percent are more concerned than excited. Additionally, more than 40 percent of respondents said they considered driverless cars to be bad for society. Yet the idea of using AI to identify the spread of false information on social media was better received, with close to 40 percent of those surveyed labeling it a good idea.

AI is a boon for improving productivity and efficiency while at the same time reducing the potential for human error. But there are also some disadvantages, like development costs and the possibility for automated machines to replace human jobs. It’s worth noting, however, that the artificial intelligence industry stands to create jobs, too — some of which have not even been invented yet.

Future of Artificial Intelligence

When one considers the computational costs and the technical data infrastructure running behind artificial intelligence, actually executing on AI is a complex and costly business. Fortunately, there have been massive advancements in computing technology, as indicated by Moore’s Law, which states that the number of transistors on a microchip doubles about every two years while the cost of computers is halved.

Although many experts believe that Moore's Law will likely come to an end sometime in the 2020s, it has had a major impact on modern AI techniques; without it, deep learning would be out of the question, financially speaking. Recent research found that AI innovation has actually outperformed Moore's Law, doubling every six months or so as opposed to two years.
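To get a feel for the gap between those two doubling rates, the short Python calculation below compares them over the same span. The six-year horizon and the `growth_factor` helper are assumptions for illustration; the article does not specify which quantity is doubling, so the result is only a ratio.

```python
def growth_factor(years, doubling_period_years):
    """How many times larger a quantity becomes if it doubles every period."""
    return 2 ** (years / doubling_period_years)


# Growth over six years under each doubling rate.
moore_growth = growth_factor(6, 2.0)  # doubling every two years
fast_growth = growth_factor(6, 0.5)   # doubling every six months
```

Over six years, doubling every two years yields three doublings (8 times larger), while doubling every six months yields twelve doublings (4,096 times larger).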

By that logic, the advancements artificial intelligence has made across a variety of industries have been major over the last several years. And the potential for an even greater impact over the next several decades seems all but inevitable.

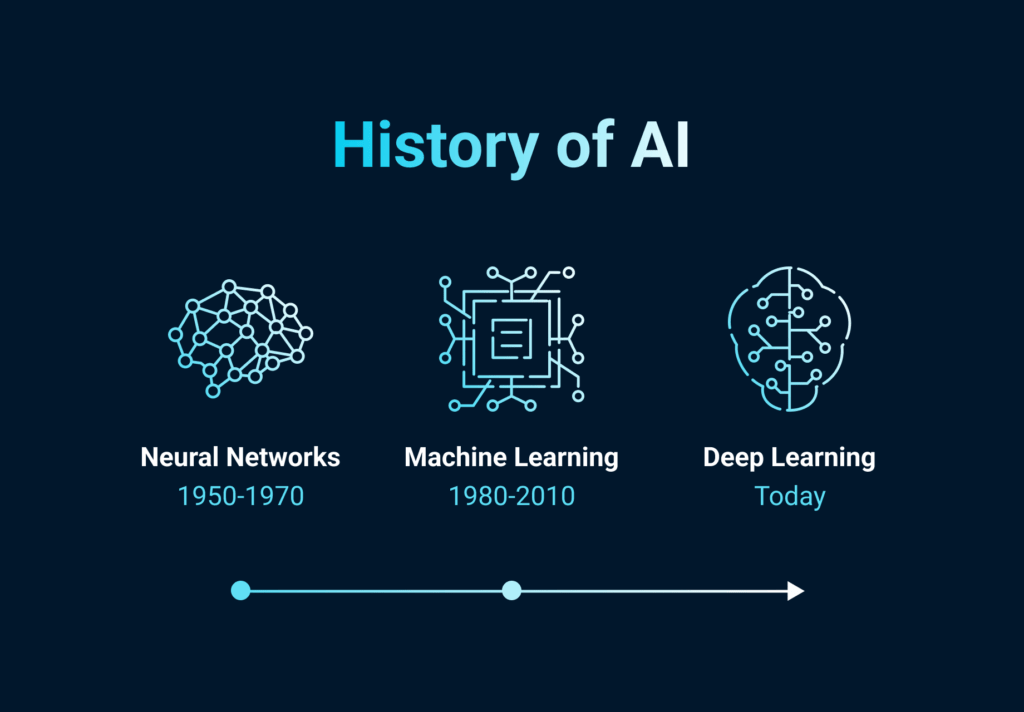

History of AI: A Timeline

History of AI

Intelligent robots and artificial beings first appeared in ancient Greek myths. And Aristotle’s development of syllogism and its use of deductive reasoning was a key moment in humanity’s quest to understand its own intelligence. While the roots are long and deep, the history of AI as we think of it today spans less than a century. The following is a quick look at some of the most important events in AI.

1940s

- (1942) Isaac Asimov publishes the Three Laws of Robotics, an idea commonly found in science fiction media about how artificial intelligence should not bring harm to humans.

- (1943) Warren McCulloch and Walter Pitts publish the paper “A Logical Calculus of the Ideas Immanent in Nervous Activity,” which proposes the first mathematical model for building a neural network.

- (1949) In his book The Organization of Behavior: A Neuropsychological Theory, Donald Hebb proposes the theory that neural pathways are created from experiences and that connections between neurons become stronger the more frequently they’re used. Hebbian learning continues to be an important model in AI.

1950s

- (1950) Alan Turing publishes the paper “Computing Machinery and Intelligence,” proposing what is now known as the Turing Test, a method for determining if a machine is intelligent.

- (1950) Harvard undergraduates Marvin Minsky and Dean Edmonds build SNARC, the first neural network computer.

- (1950) Claude Shannon publishes the paper “Programming a Computer for Playing Chess.”

- (1952) Arthur Samuel develops a self-learning program to play checkers.

- (1954) The Georgetown-IBM machine translation experiment automatically translates 60 carefully selected Russian sentences into English.

- (1956) The phrase “artificial intelligence” is coined at the Dartmouth Summer Research Project on Artificial Intelligence. Led by John McCarthy, the conference is widely considered to be the birthplace of AI.

- (1956) Allen Newell and Herbert Simon demonstrate Logic Theorist (LT), the first reasoning program.

- (1958) John McCarthy develops the AI programming language Lisp and publishes “Programs with Common Sense,” a paper proposing the hypothetical Advice Taker, a complete AI system with the ability to learn from experience as effectively as humans.

- (1959) Allen Newell, Herbert Simon and J.C. Shaw develop the General Problem Solver (GPS), a program designed to imitate human problem-solving.

- (1959) Herbert Gelernter develops the Geometry Theorem Prover program.

- (1959) Arthur Samuel coins the term “machine learning” while at IBM.

- (1959) John McCarthy and Marvin Minsky found the MIT Artificial Intelligence Project.

1960s

- (1963) John McCarthy starts the AI Lab at Stanford.

- (1966) The Automatic Language Processing Advisory Committee (ALPAC) report by the U.S. government details the lack of progress in machine translation research, a major Cold War initiative with the promise of automatic and instantaneous translation of Russian. The ALPAC report leads to the cancellation of all government-funded MT projects.

- (1969) The first successful expert systems, DENDRAL and MYCIN, are created at Stanford.

1970s

- (1972) The logic programming language PROLOG is created.

- (1973) The Lighthill Report, detailing the disappointments in AI research, is released by the British government and leads to severe cuts in funding for AI projects.

- (1974-1980) Frustration with the progress of AI development leads to major DARPA cutbacks in academic grants. Combined with the earlier ALPAC report and the previous year’s Lighthill Report, AI funding dries up and research stalls. This period is known as the “First AI Winter.”

1980s

- (1980) Digital Equipment Corporation develops R1 (also known as XCON), the first successful commercial expert system. Designed to configure orders for new computer systems, R1 kicks off an investment boom in expert systems that will last for much of the decade, effectively ending the first AI Winter.

- (1982) Japan’s Ministry of International Trade and Industry launches the ambitious Fifth Generation Computer Systems project. The goal of FGCS is to develop supercomputer-like performance and a platform for AI development.

- (1983) In response to Japan’s FGCS, the U.S. government launches the Strategic Computing Initiative to provide DARPA-funded research in advanced computing and AI.

- (1985) Companies are spending more than a billion dollars a year on expert systems, and an entire industry known as the Lisp machine market springs up to support them. Companies like Symbolics and Lisp Machines Inc. build specialized computers to run the AI programming language Lisp.

- (1987-1993) As computing technology improves, cheaper alternatives emerge and the Lisp machine market collapses in 1987, ushering in the “Second AI Winter.” During this period, expert systems prove too expensive to maintain and update, eventually falling out of favor.

1990s

- (1991) U.S. forces deploy DART, an automated logistics planning and scheduling tool, during the Gulf War.

- (1992) Japan terminates the FGCS project, citing failure in meeting the ambitious goals outlined a decade earlier.

- (1993) DARPA ends the Strategic Computing Initiative after spending nearly $1 billion and falling far short of expectations.

- (1997) IBM’s Deep Blue beats world chess champion Garry Kasparov.

2000s

- (2005) STANLEY, a self-driving car, wins the DARPA Grand Challenge.

- (2005) The U.S. military begins investing in autonomous robots like Boston Dynamics’ “Big Dog” and iRobot’s “PackBot.”

- (2008) Google makes breakthroughs in speech recognition and introduces the feature in its iPhone app.

2010s

- (2011) IBM’s Watson handily defeats the competition on Jeopardy!.

- (2011) Apple releases Siri, an AI-powered virtual assistant, through its iOS operating system.

- (2012) Andrew Ng, founder of the Google Brain Deep Learning project, feeds 10 million YouTube videos to a neural network as a training set for deep learning algorithms. The neural network learns to recognize a cat without being told what a cat is, ushering in the breakthrough era for neural networks and deep learning funding.

- (2014) Google makes the first self-driving car to pass a state driving test.

- (2014) Amazon’s Alexa, a virtual home smart device, is released.

- (2016) Google DeepMind’s AlphaGo defeats world champion Go player Lee Sedol. The complexity of the ancient Chinese game was seen as a major hurdle to clear in AI.

- (2016) The first “robot citizen,” a humanoid robot named Sophia, is created by Hanson Robotics and is capable of facial recognition, verbal communication and facial expression.

- (2018) Google releases natural language processing engine BERT, reducing barriers in translation and understanding by ML applications.

- (2018) Waymo launches its Waymo One service, allowing users throughout the Phoenix metropolitan area to request a pick-up from one of the company’s self-driving vehicles.

2020s

- (2020) Baidu releases its LinearFold AI algorithm to scientific and medical teams working to develop a vaccine during the early stages of the SARS-CoV-2 pandemic. The algorithm is able to predict the secondary structure of the virus’s RNA sequence in just 27 seconds, 120 times faster than other methods.

- (2020) OpenAI releases natural language processing model GPT-3, which is able to produce text modeled after the way people speak and write.

- (2021) OpenAI builds on GPT-3 to develop DALL-E, which is able to create images from text prompts.

- (2022) The National Institute of Standards and Technology releases the first draft of its AI Risk Management Framework, voluntary U.S. guidance “to better manage risks to individuals, organizations, and society associated with artificial intelligence.”

- (2022) DeepMind unveils Gato, an AI system trained to perform hundreds of tasks, including playing Atari, captioning images and using a robotic arm to stack blocks.

- (2022) OpenAI launches ChatGPT, a chatbot powered by a large language model that gains more than 100 million users in just a few months.

- (2023) Microsoft launches an AI-powered version of Bing, its search engine, built on the same technology that powers ChatGPT.

- (2023) Google announces Bard, a competing conversational AI.

- (2023) OpenAI launches GPT-4, its most sophisticated language model yet.
